In-Memory Caching: A Performance Booster

PERFORMANCE

Deepak Jha

1/22/2025 · 2 min read

This post explains the science behind in-memory caching and why it is a game-changer in performance engineering.

Let’s look at Memory vs. Disk Speed

RAM access speed: ~100ns (nanoseconds)

SSD access speed: ~100µs (microseconds), ~1,000x slower than RAM

HDD access speed: ~10ms (milliseconds), ~100,000x slower than RAM

Network latency (API/database call): ~10-100ms

These numbers show that memory (RAM) access is orders of magnitude faster than disk or network access. Serving data from RAM therefore reduces latency, which makes in-memory caching a crucial optimization for high-performance applications.
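To see how these figures translate into request latency, here is a rough back-of-the-envelope sketch. It assumes a simple model in which a fraction of requests (the hit ratio) is served from RAM and the rest go to the slower backend; the function name `average_latency_ns` and the chosen hit ratios are illustrative, not measurements.

```python
# Approximate latencies from the figures above, expressed in nanoseconds.
RAM_NS = 100            # ~100 ns
SSD_NS = 100_000        # ~100 µs
HDD_NS = 10_000_000     # ~10 ms


def average_latency_ns(hit_ratio: float, cache_ns: float, backend_ns: float) -> float:
    """Expected latency per request when `hit_ratio` of requests hit the cache.

    Simplified model (an assumption): a hit costs `cache_ns`,
    a miss costs `backend_ns`, and nothing else is counted.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ns + (1.0 - hit_ratio) * backend_ns


def print_table() -> None:
    """Print the modelled latency for a few illustrative hit ratios."""
    for hit_ratio in (0.0, 0.5, 0.9, 0.99):
        avg = average_latency_ns(hit_ratio, RAM_NS, SSD_NS)
        print(f"hit ratio {hit_ratio:.0%}: ~{avg / 1000:.1f} µs per request")
```

Calling `print_table()` shows that even in this simplified model the misses dominate the average, so the benefit depends heavily on how often requests actually hit the cache.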

How Caching Works Internally

Frequently accessed data is stored in RAM instead of querying databases or making expensive disk reads.

Works on Temporal Locality (recently used data is likely to be accessed again) and Spatial Locality (nearby data is likely to be accessed together).

Uses key-value stores (e.g., Redis, Memcached) or in-process memory (e.g., dictionaries in Python, HashMaps in Java).

Let’s look at the use cases where in-memory caching improves performance:

Web Applications: Caching API responses to reduce database queries.

Databases: MySQL (the InnoDB buffer pool) and PostgreSQL (shared buffers) keep frequently accessed data pages in memory.

Machine Learning/AI: Storing intermediate computation results.

Gaming Applications: Caching player states, game assets for faster access.

E-commerce: Storing product details, user sessions, and cart data.

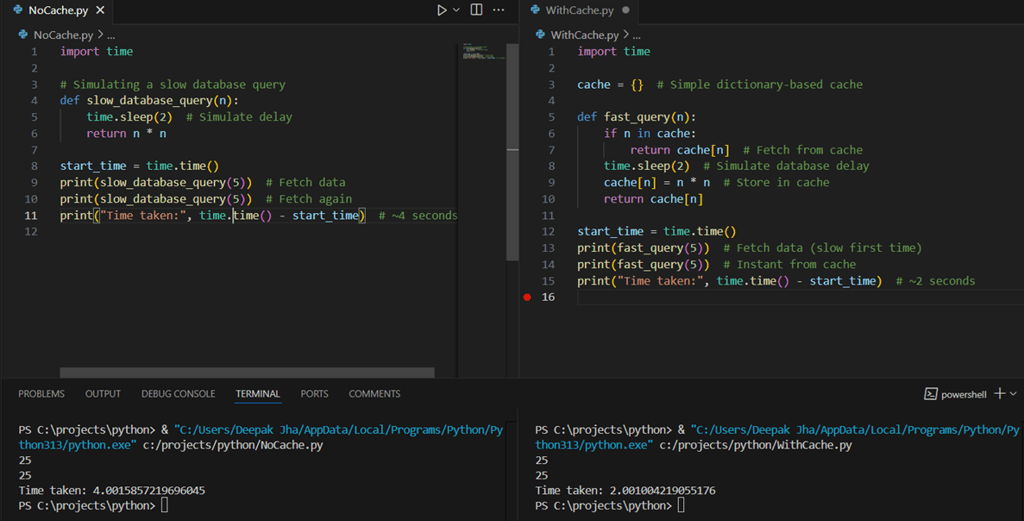

Let’s check out the impact of in-memory caching with a small Python implementation.

In this example we will compare the execution time of a program that fetches data from a simulated "slow database" versus using an in-memory cache.

In the left image, the run of NoCache.py is slow overall because the database is queried every time, so every call takes 2 seconds.

In the right image, the run of WithCache.py shows that the second call is effectively instant because the data is served from memory instead of the database!
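The scripts themselves appear only in the image, so the following is a minimal sketch of what such a comparison might look like, not the original code. The names `slow_database_query`, `get_with_cache`, `timed`, and `demo` are assumptions; the 2-second delay matches the figure quoted above and is a parameter so it can be shortened.

```python
import time


def slow_database_query(key: str, delay_seconds: float = 2.0) -> str:
    """Simulated slow database: sleep, then return a made-up value."""
    time.sleep(delay_seconds)
    return f"value-for-{key}"


_cache: dict[str, str] = {}


def get_with_cache(key: str, delay_seconds: float = 2.0) -> str:
    """Return the cached value if present; otherwise query and store it."""
    if key not in _cache:
        _cache[key] = slow_database_query(key, delay_seconds)
    return _cache[key]


def timed(func, *args) -> float:
    """Run func(*args) and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    func(*args)
    return time.perf_counter() - start


def demo(delay_seconds: float = 2.0) -> None:
    """Compare two uncached calls with two cached calls for the same key."""
    for i in (1, 2):
        elapsed = timed(slow_database_query, "user:1", delay_seconds)
        print(f"no cache,   call {i}: {elapsed:.2f}s")
    for i in (1, 2):
        elapsed = timed(get_with_cache, "user:1", delay_seconds)
        print(f"with cache, call {i}: {elapsed:.2f}s")
```

Running `demo()` should show both uncached calls and the first cached call paying the full delay, with only the second cached call returning almost immediately.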

This is a very simple demonstration of the benefits of caching. A real-world solution must keep the cache synchronized with the data source; otherwise applications may read stale data, leading to inconsistencies and potential data integrity issues. Cache invalidation and update strategies (e.g., TTL expiry, write-through, or write-behind caching) help mitigate this risk.
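As a rough illustration of two of these strategies, the sketch below combines TTL expiry with a write-through update. It is a simplified, single-threaded example under stated assumptions: `TTLCache` and `write_through` are hypothetical names, the "data source" is just a dictionary, and the clock is injectable so expiry can be exercised without waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expiry timestamp, value)
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None                  # never cached
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]       # expired: drop the stale entry
            return None
        return value

    def put(self, key: str, value: str) -> None:
        self._entries[key] = (self._clock() + self._ttl, value)


def write_through(cache: TTLCache, store: Dict[str, str],
                  key: str, value: str) -> None:
    """Write-through: update the data source and the cache in the same step."""
    store[key] = value       # the dict stands in for the real data source
    cache.put(key, value)
```

With TTL alone, a reader may still see a stale value until the entry expires; pairing it with write-through narrows that window for writes that go through this code path, though writes made elsewhere would still be invisible until expiry.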

Pros and Cons of In-Memory Caching

A product developer or performance engineer should weigh both the pros and the cons of in-memory caching.

In-memory caching is a powerful tool for boosting performance, reducing latency, and optimizing resource usage. However, it requires careful handling of cache invalidation, memory management, and consistency.